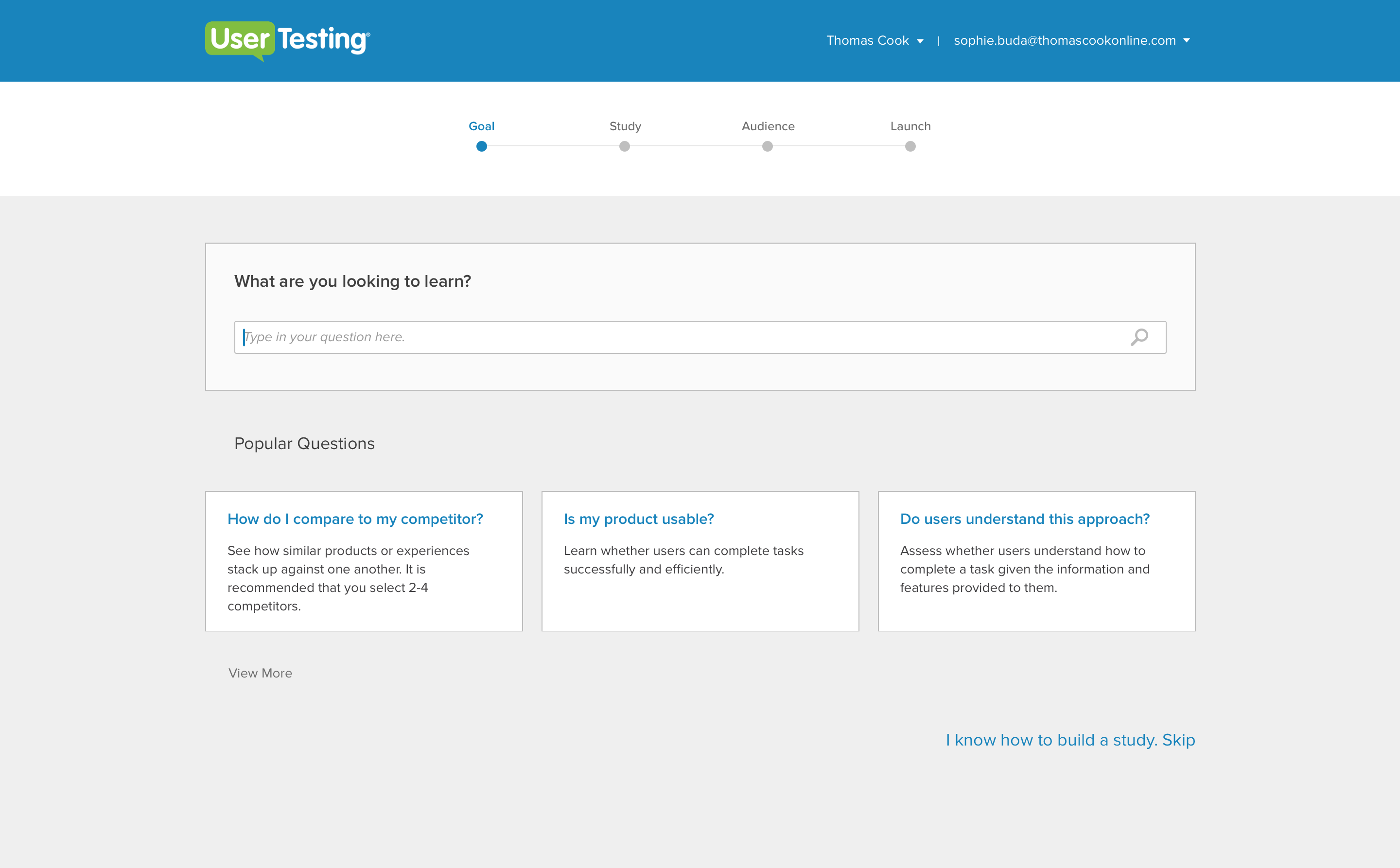

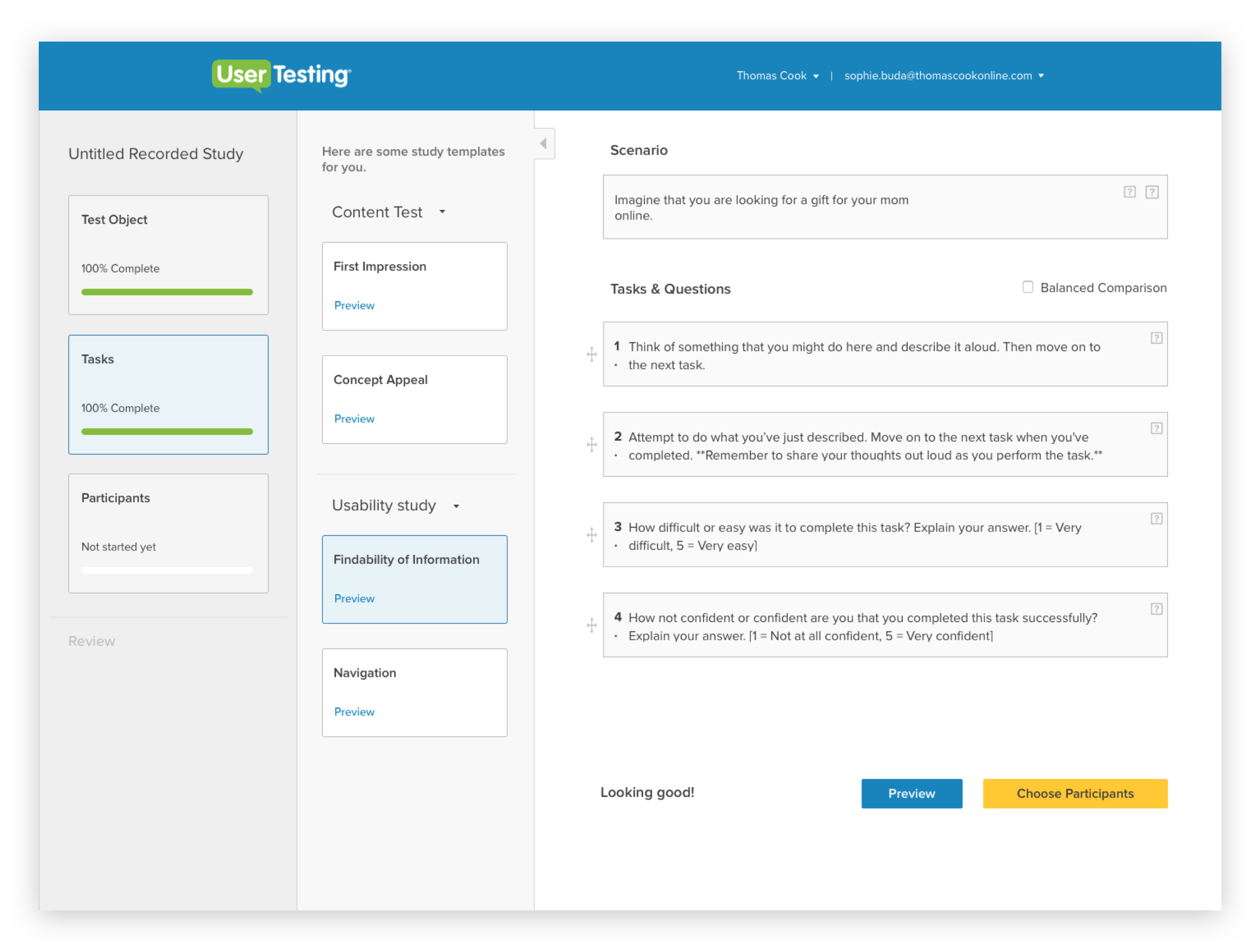

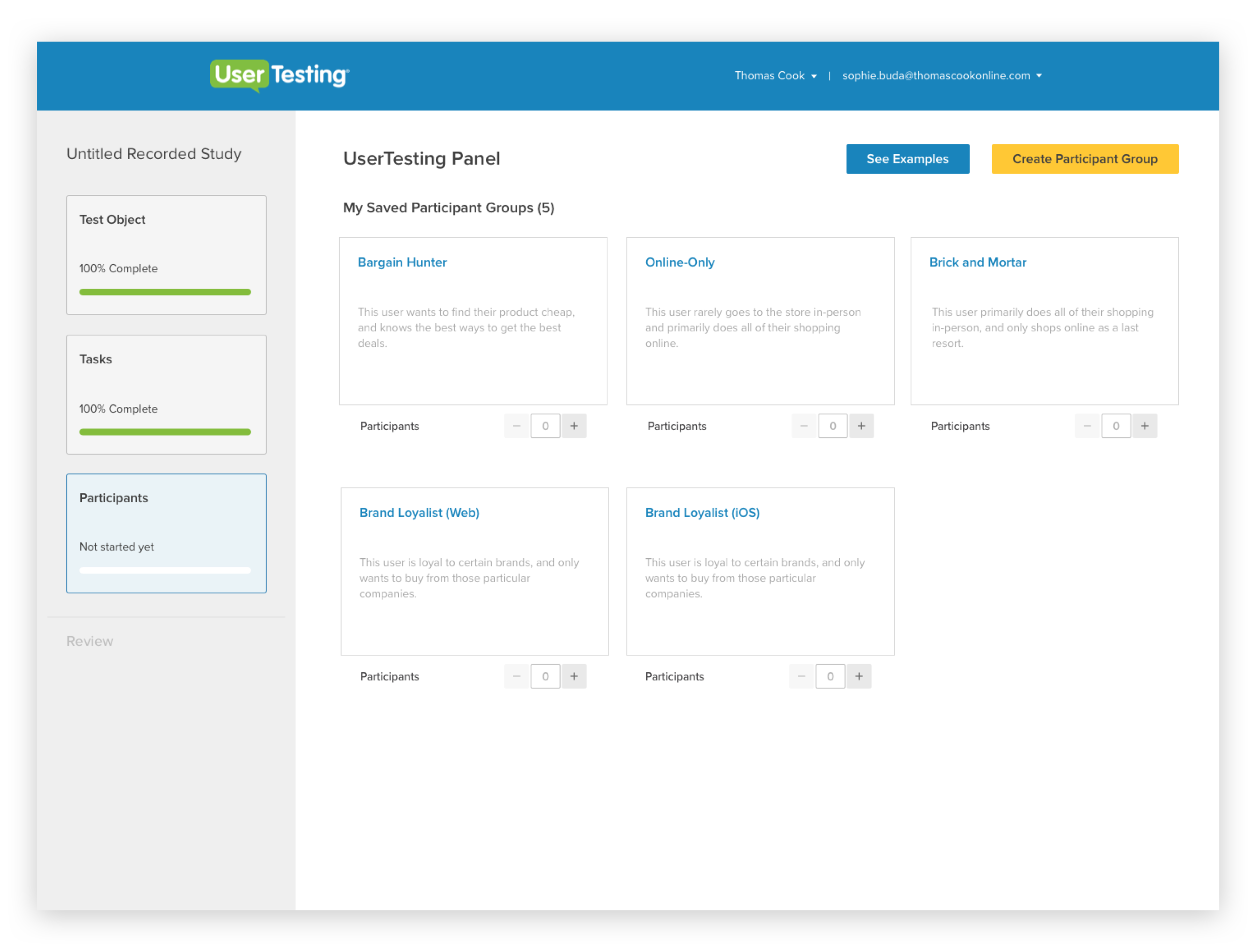

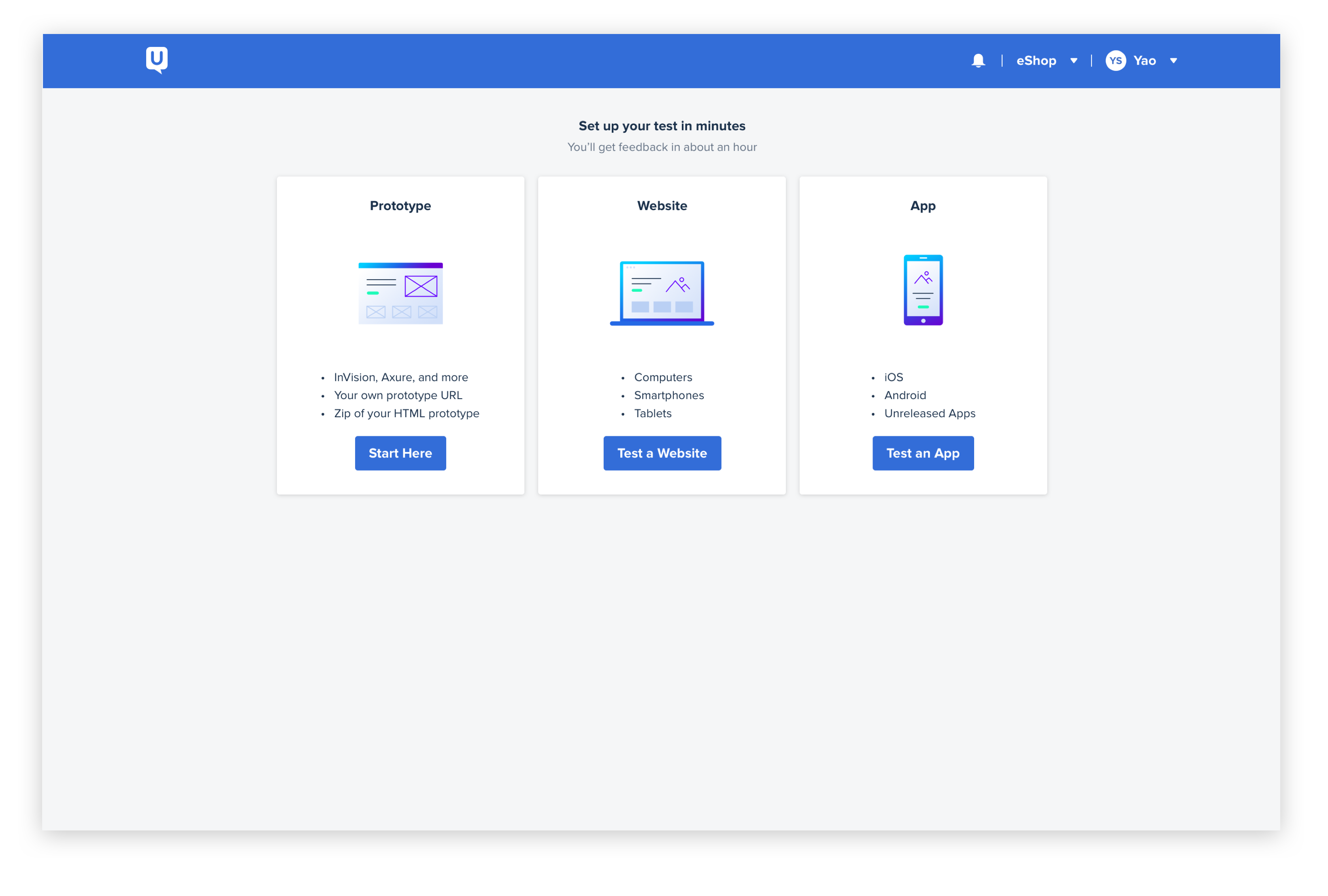

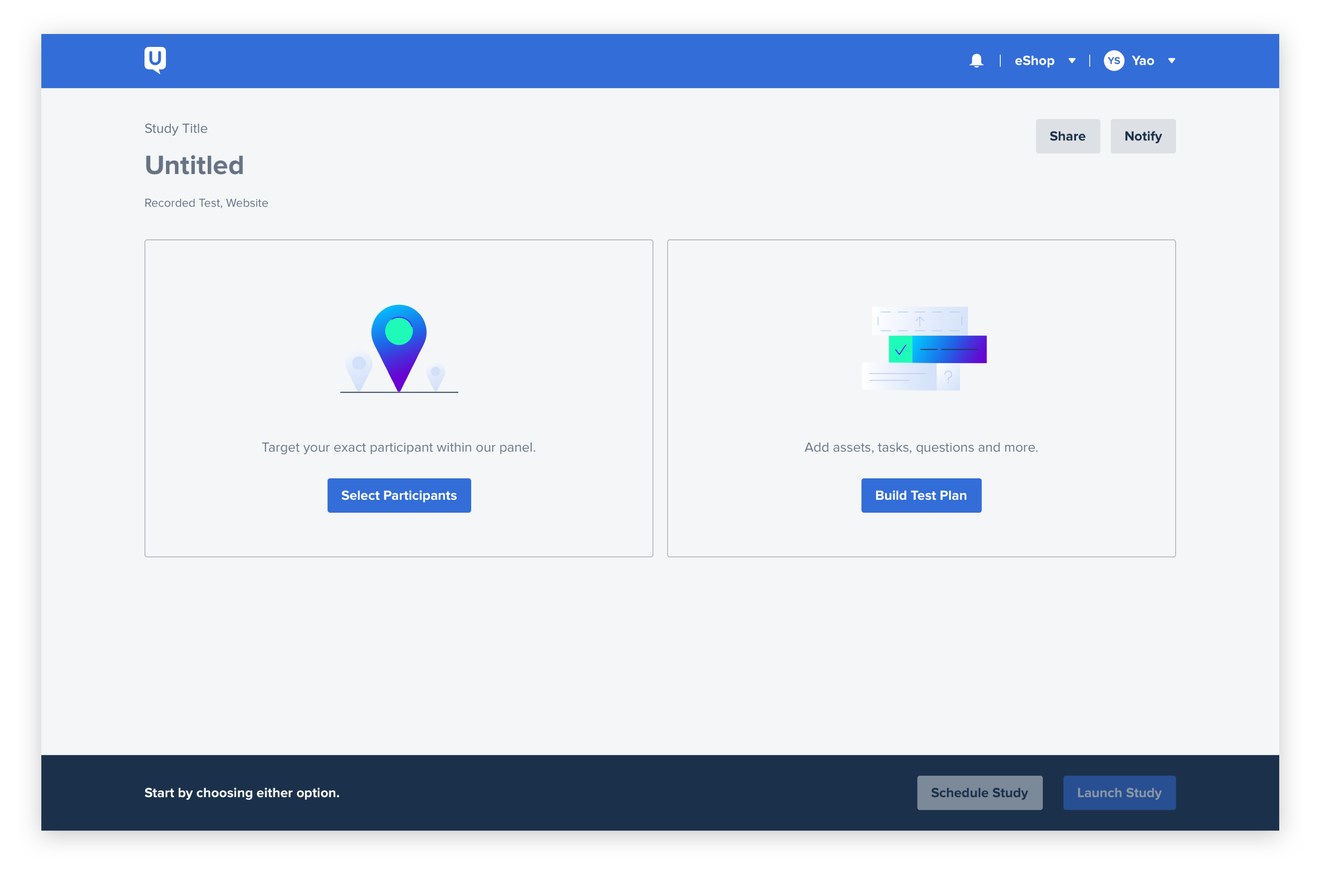

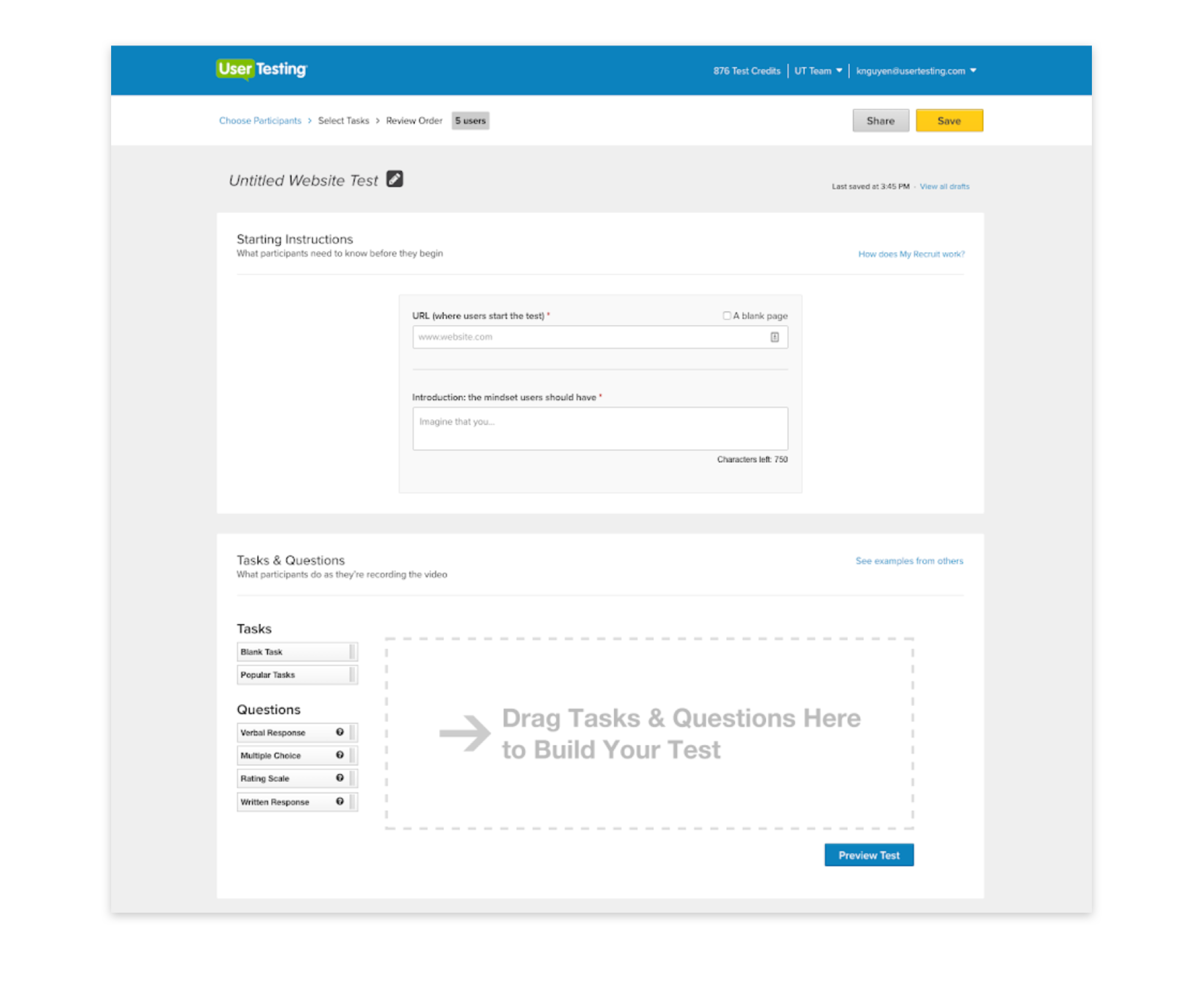

I joined UserTesting in 2017 and set out to redesign the entire product experience from the ground up as part of a design team of four. The company had been around for a decade, but the product experience had remained largely unchanged since 2007.

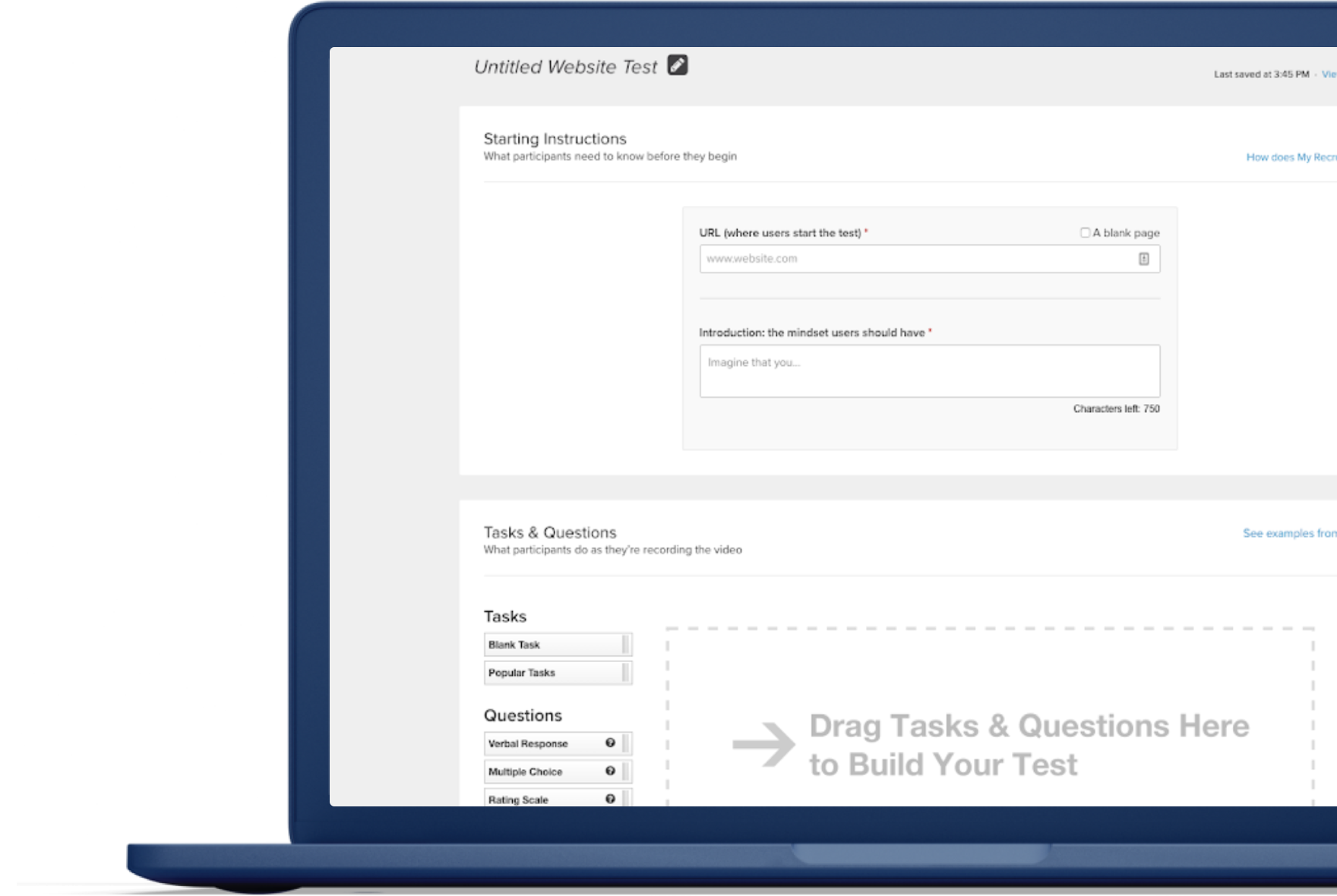

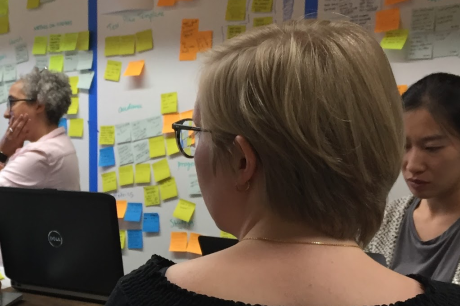

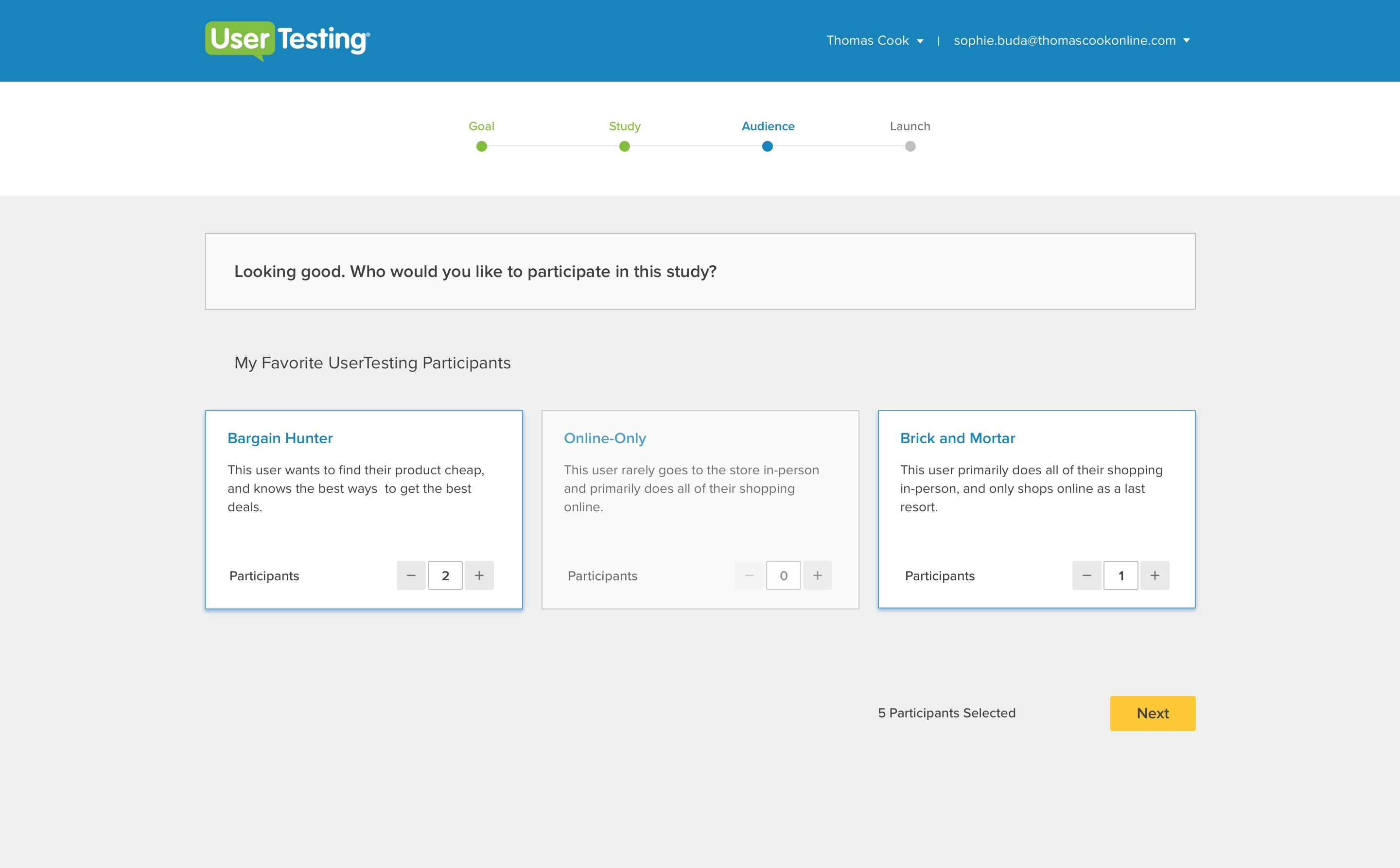

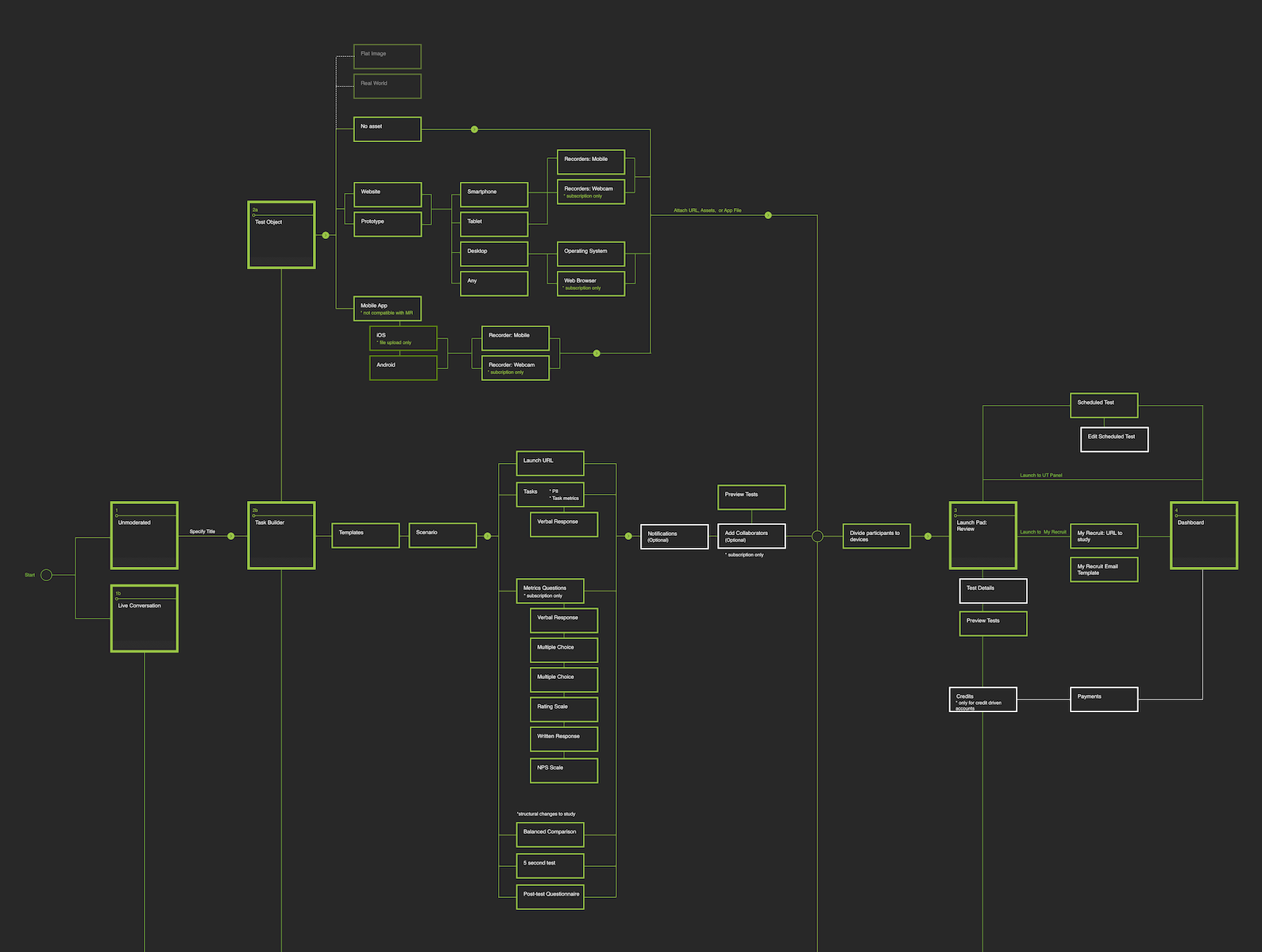

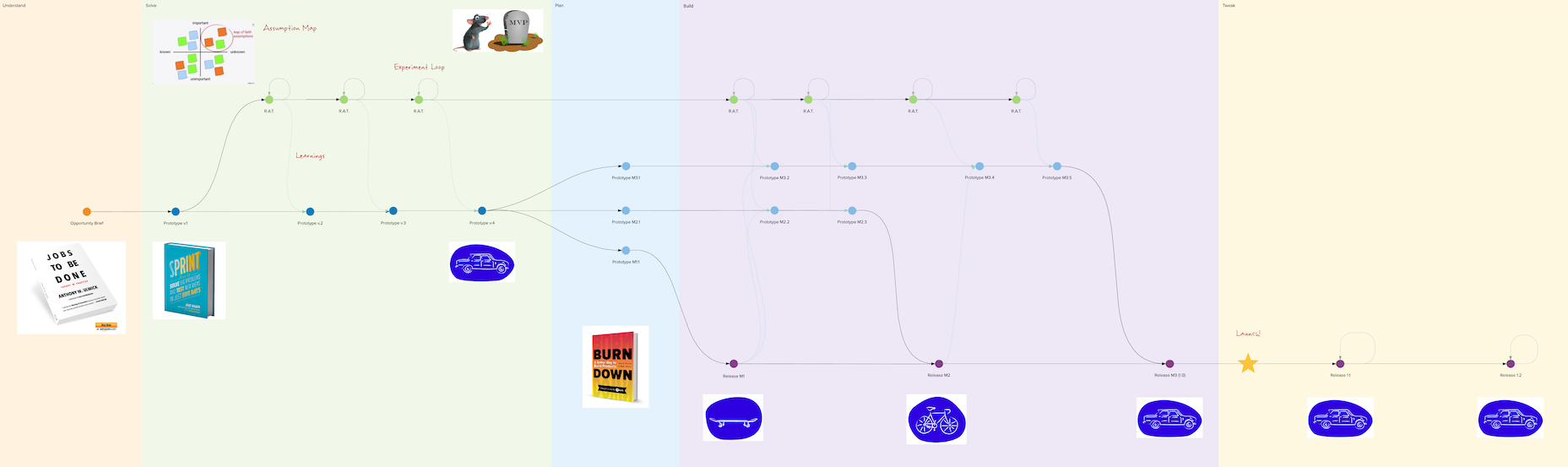

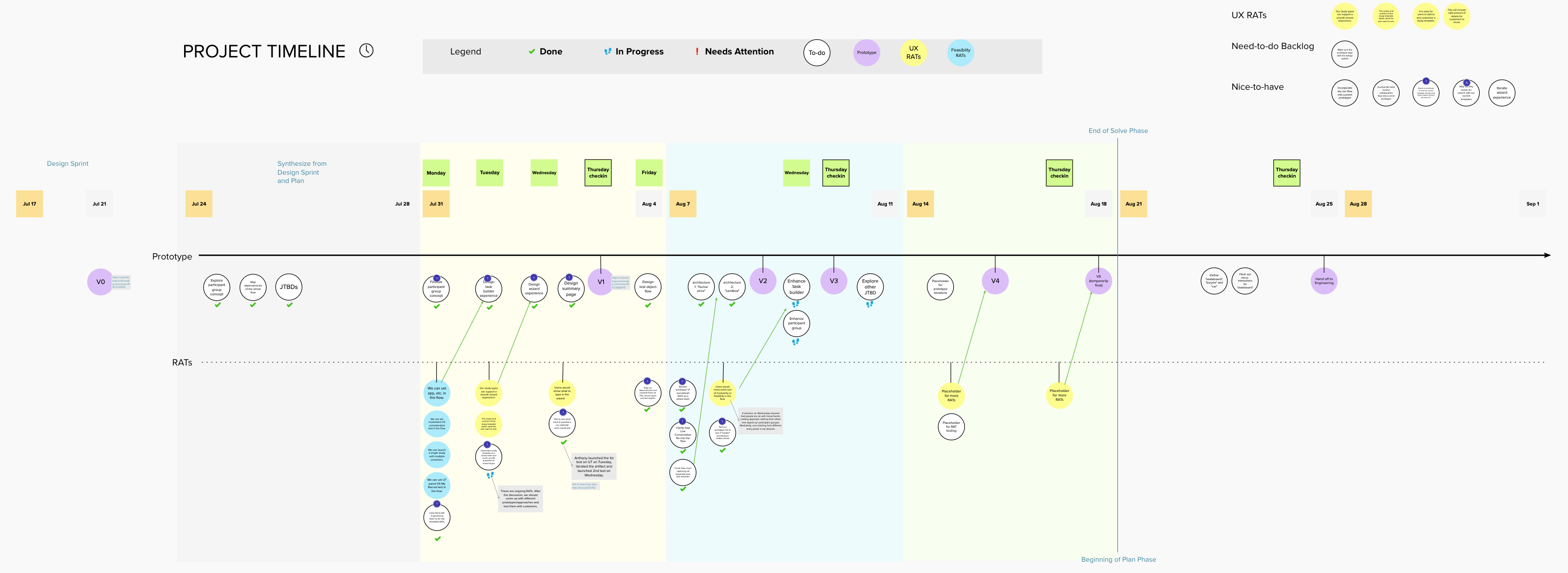

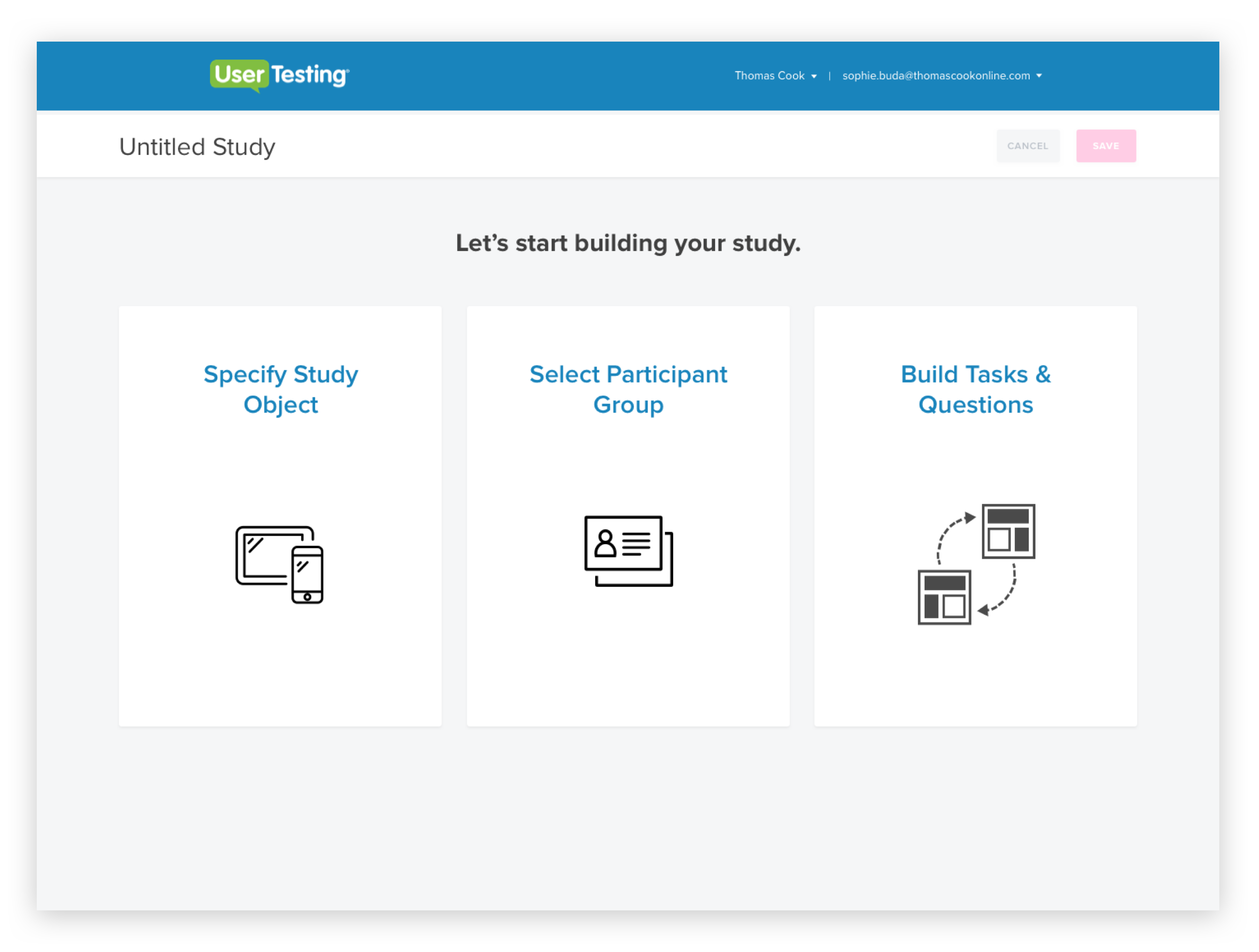

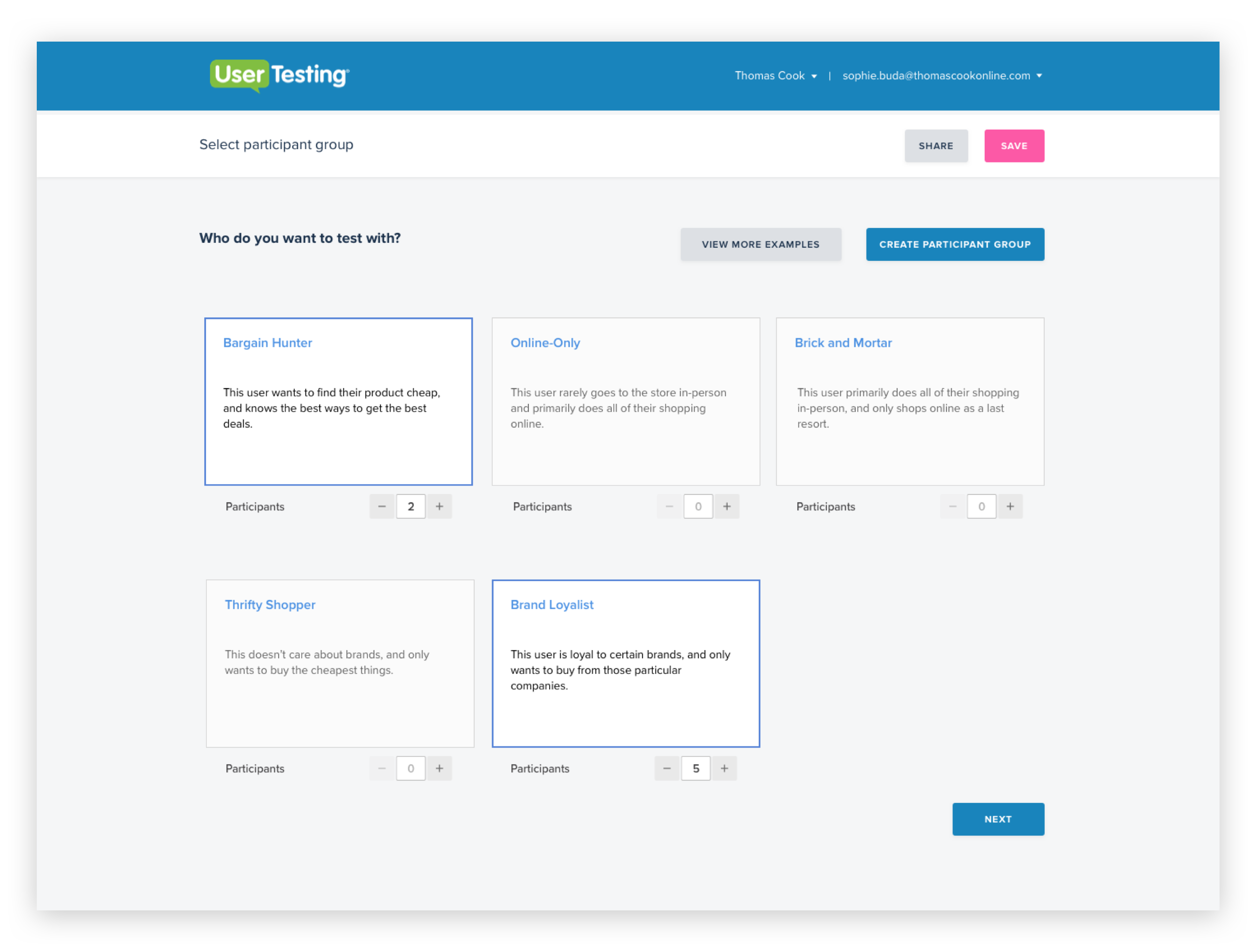

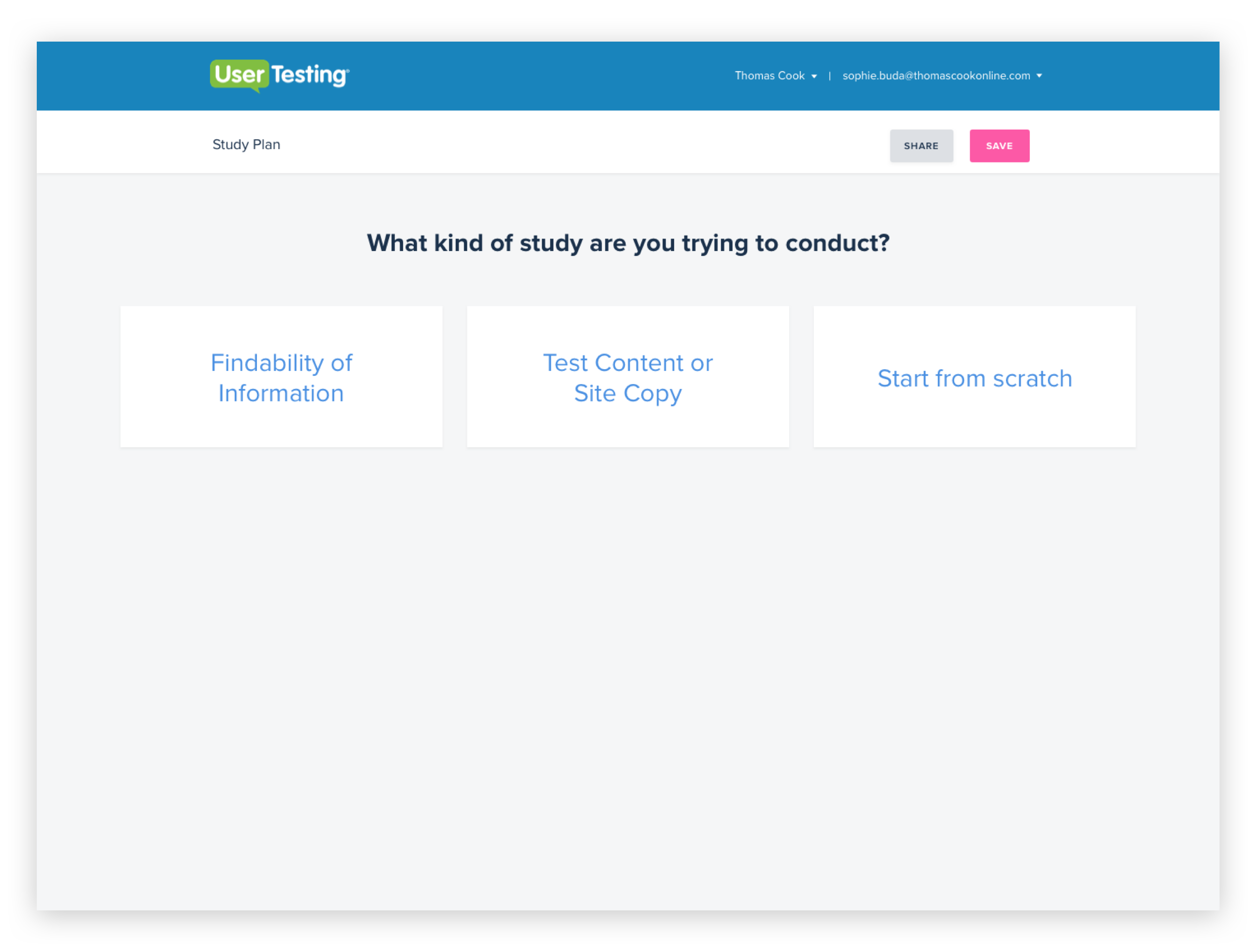

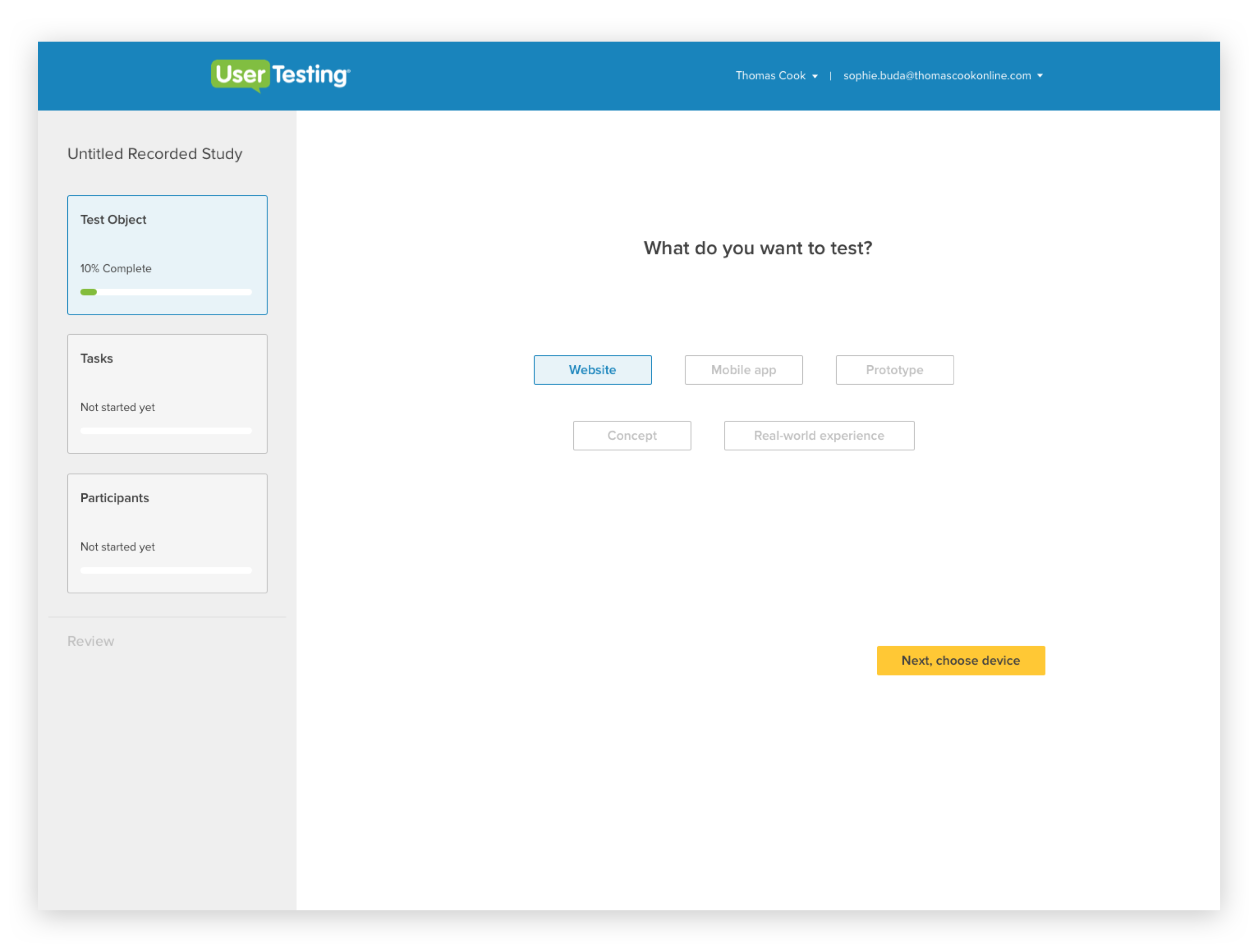

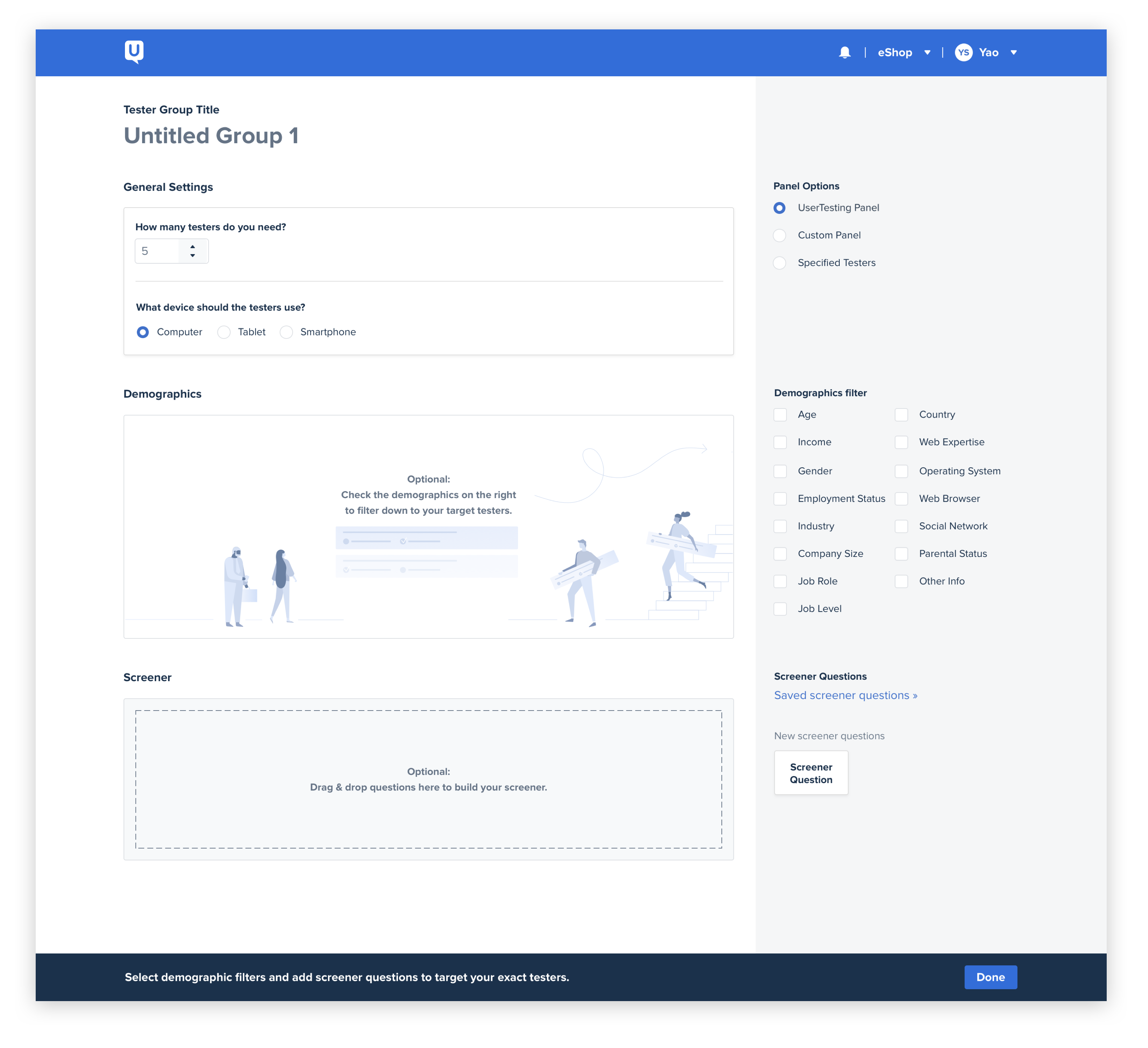

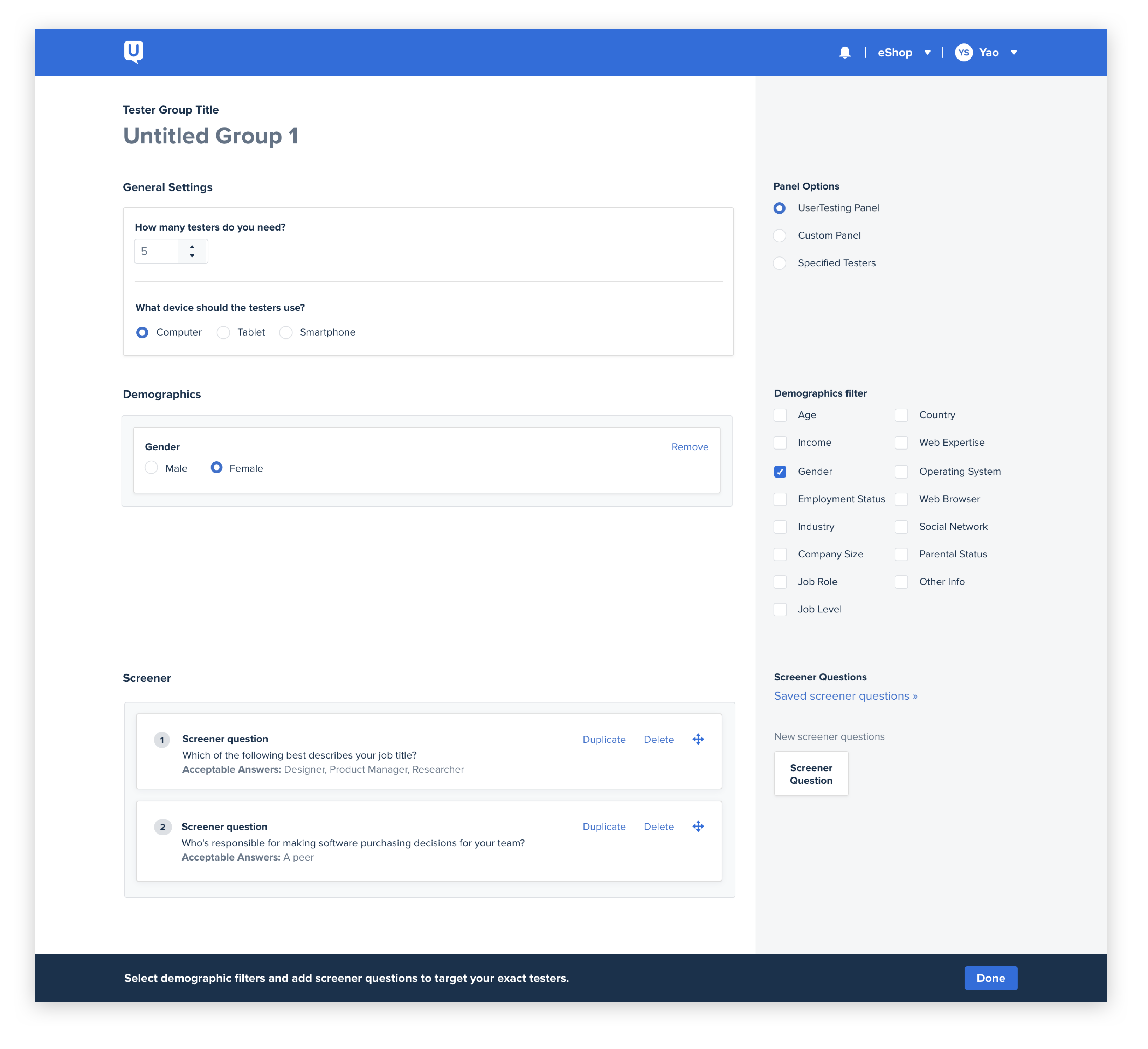

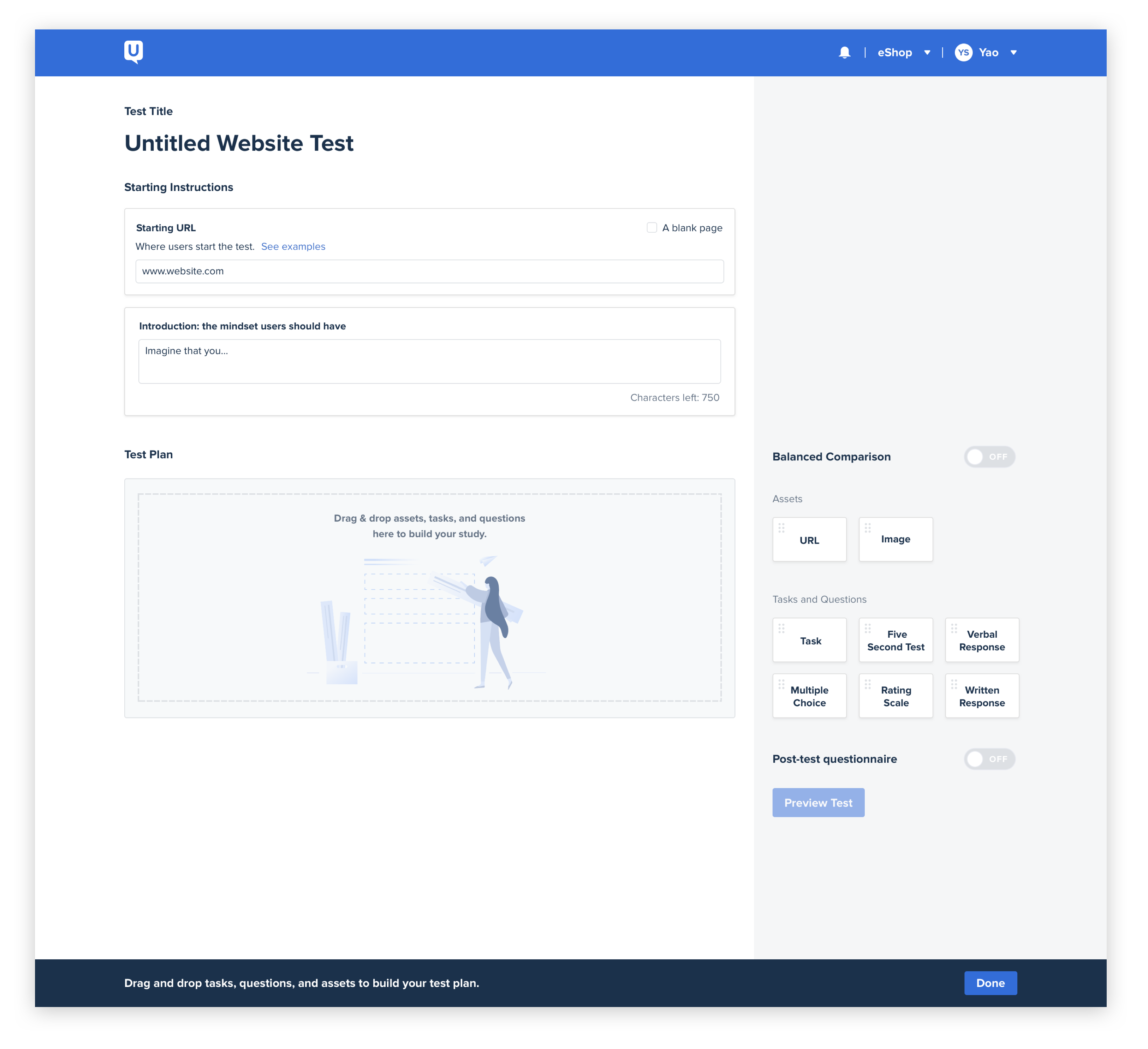

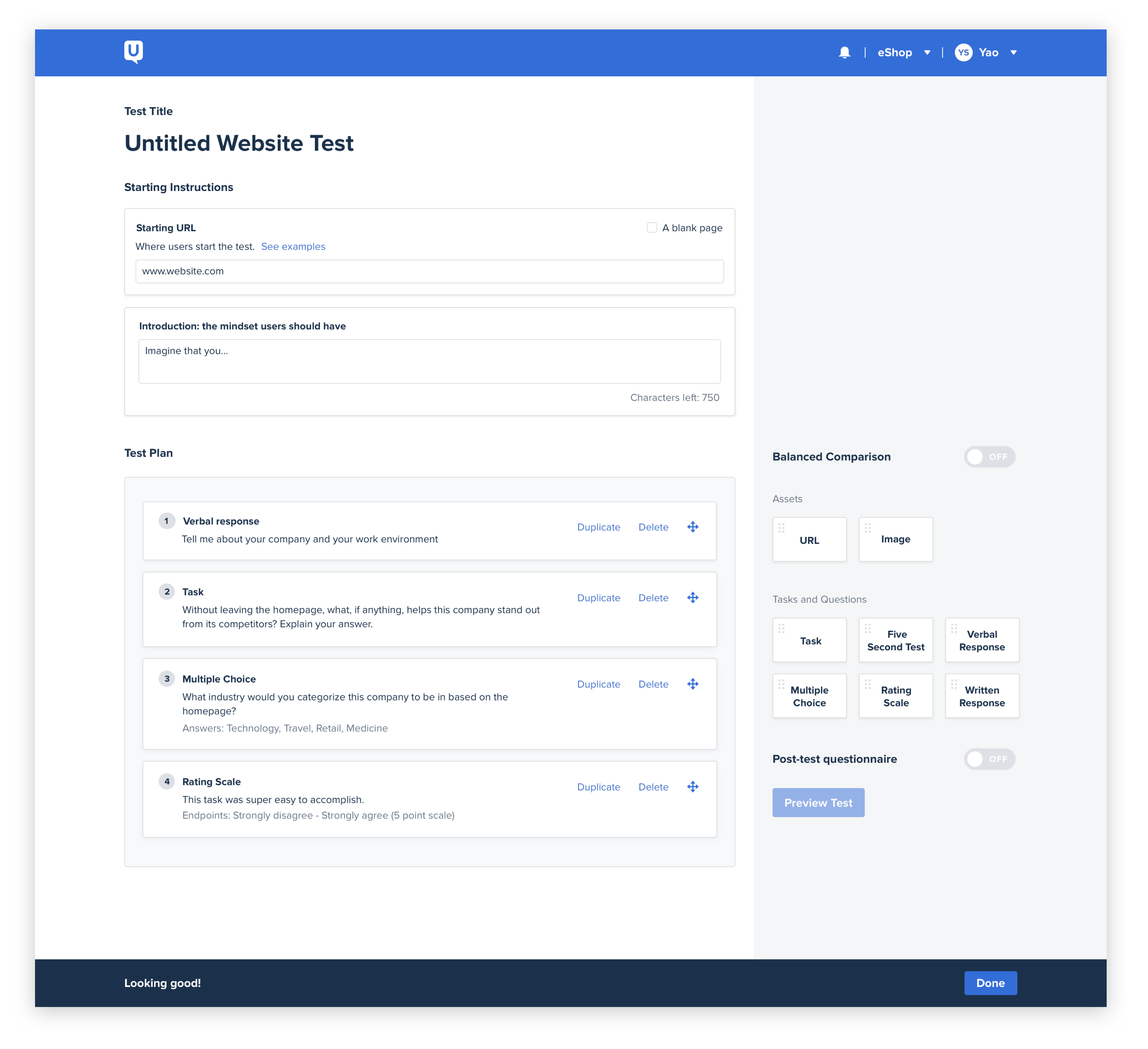

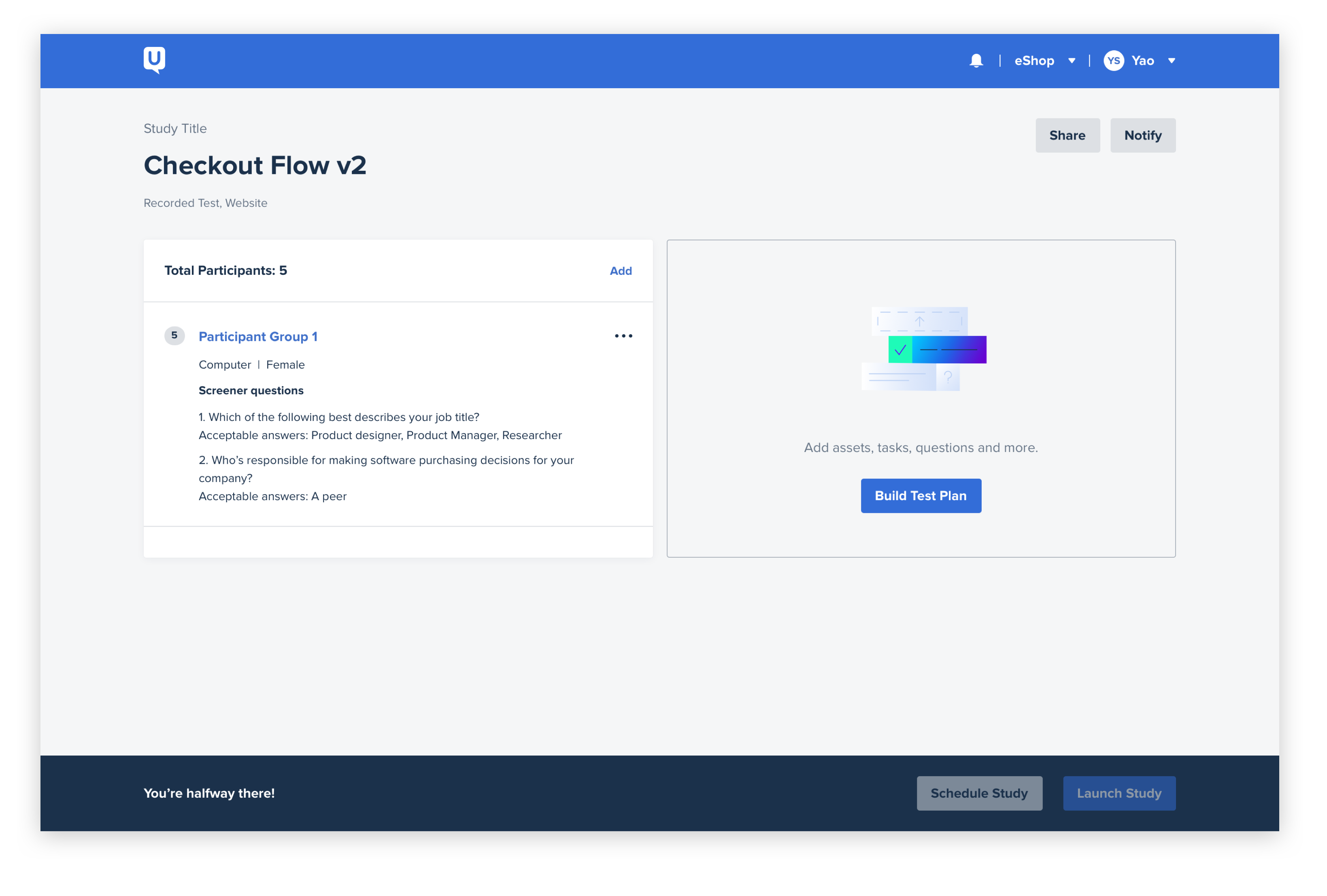

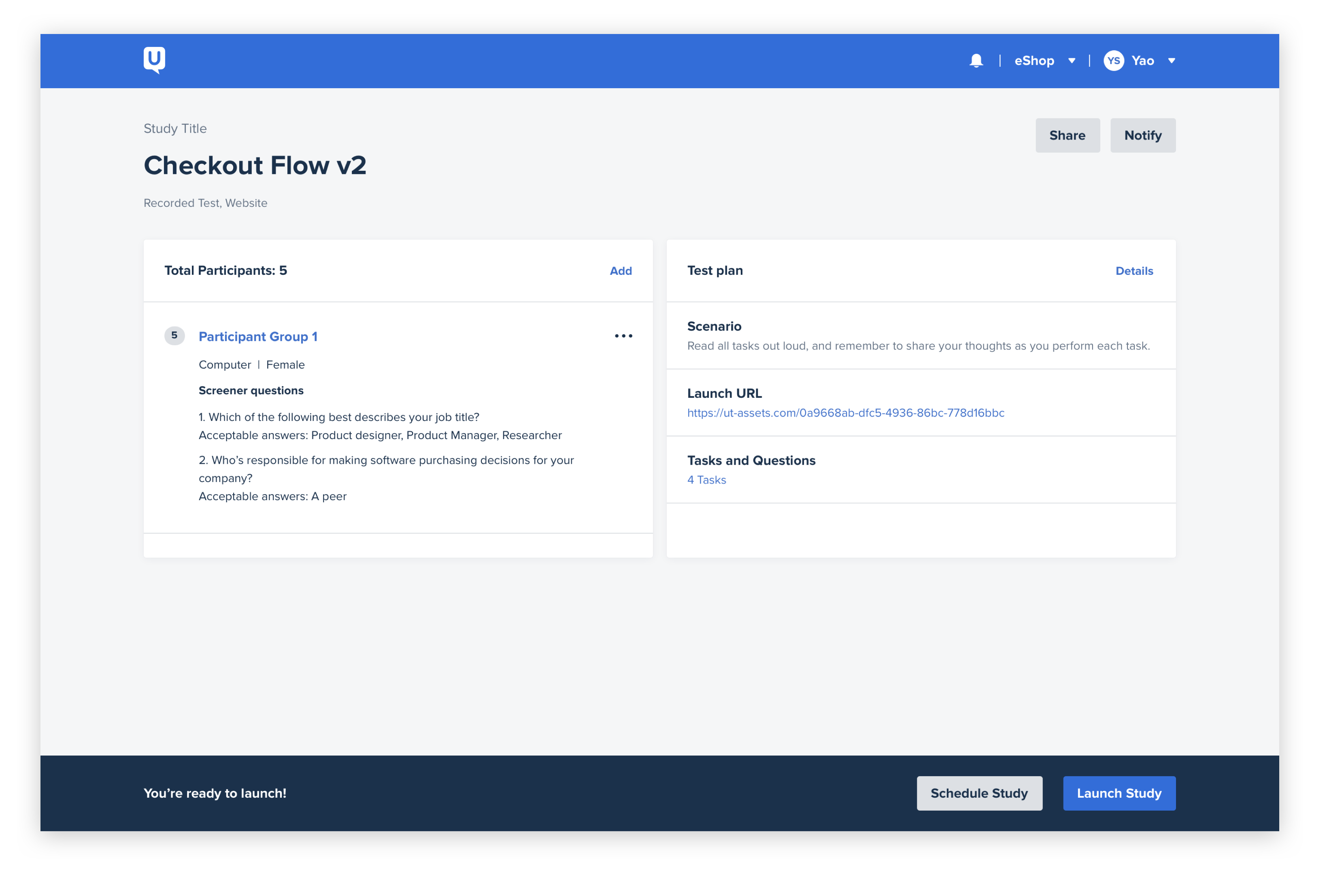

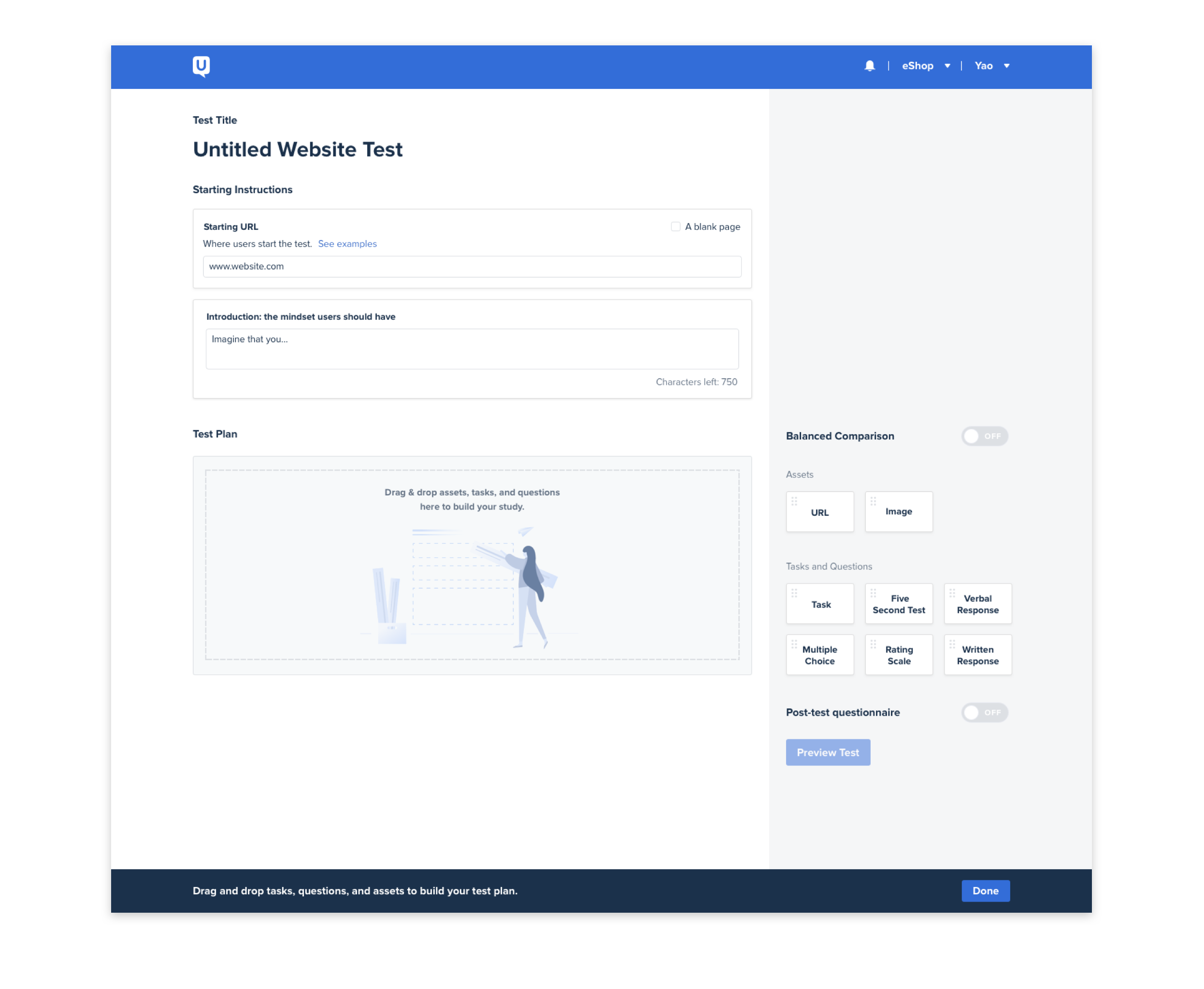

Paired with another product designer, I worked on the biggest piece of the redesign project: the “study launcher.” This is the product area where customers build their test plans and distribute them to their target audience. Our cross-functional “product developers” followed the burndown framework to help us arrive at the right solution. At its core, the process was a design-thinking, customer-centric approach to designing and building an experience our customers would love. Our goal was to make the experience easier for research novices and to catalyze research among team members outside the traditional UX practice. After a year of development, we launched the product and received praise from both our internal partners and the customers who have tried it.