As a SaaS company, UserTesting faces a common business problem: the end users (UX research and design teams) are not the buyers (Head of Design, Head of Research, CTO…). How do we increase UserTesting’s perceived value from the buyer’s point of view, as well as its actual value to customers?

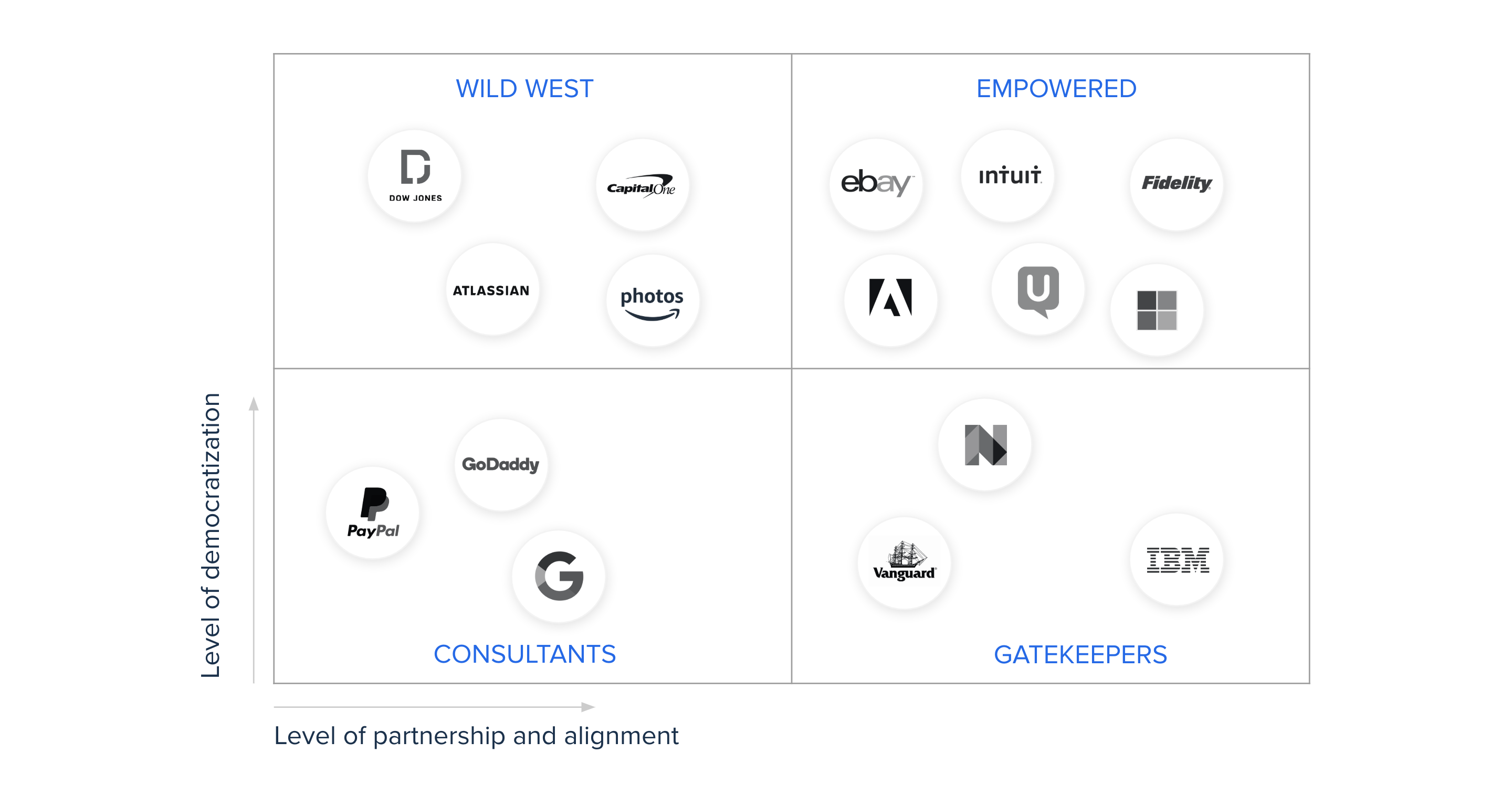

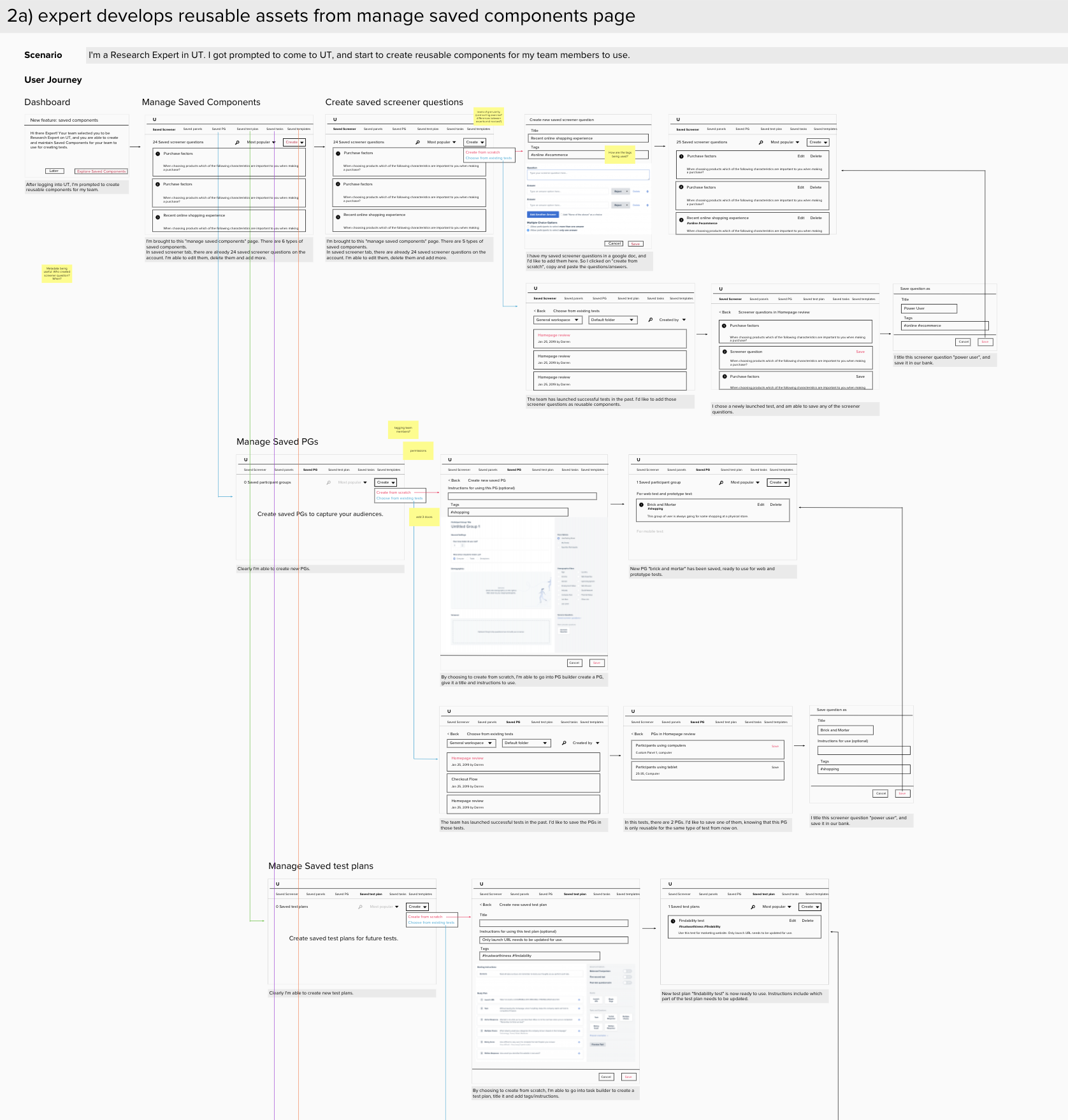

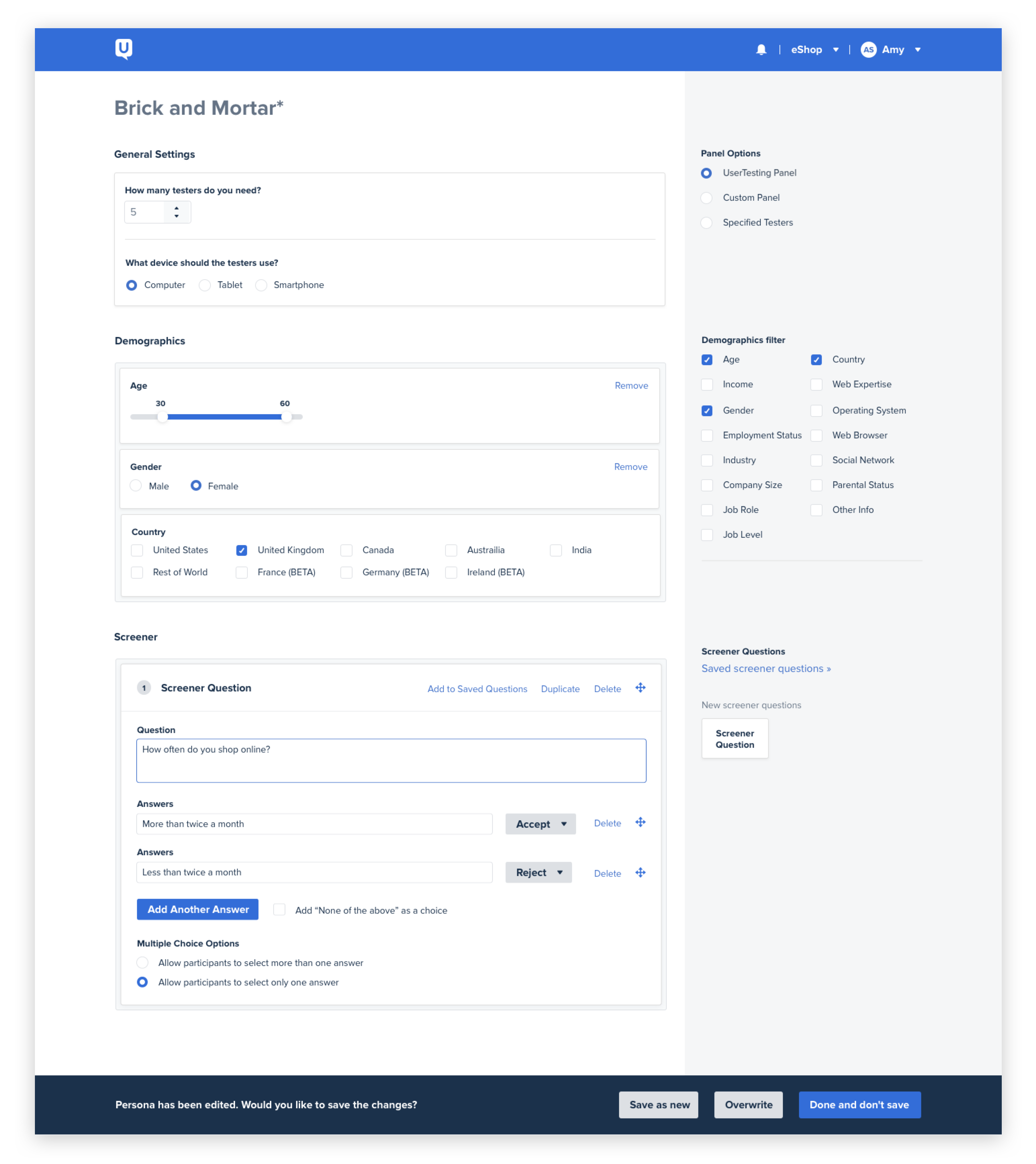

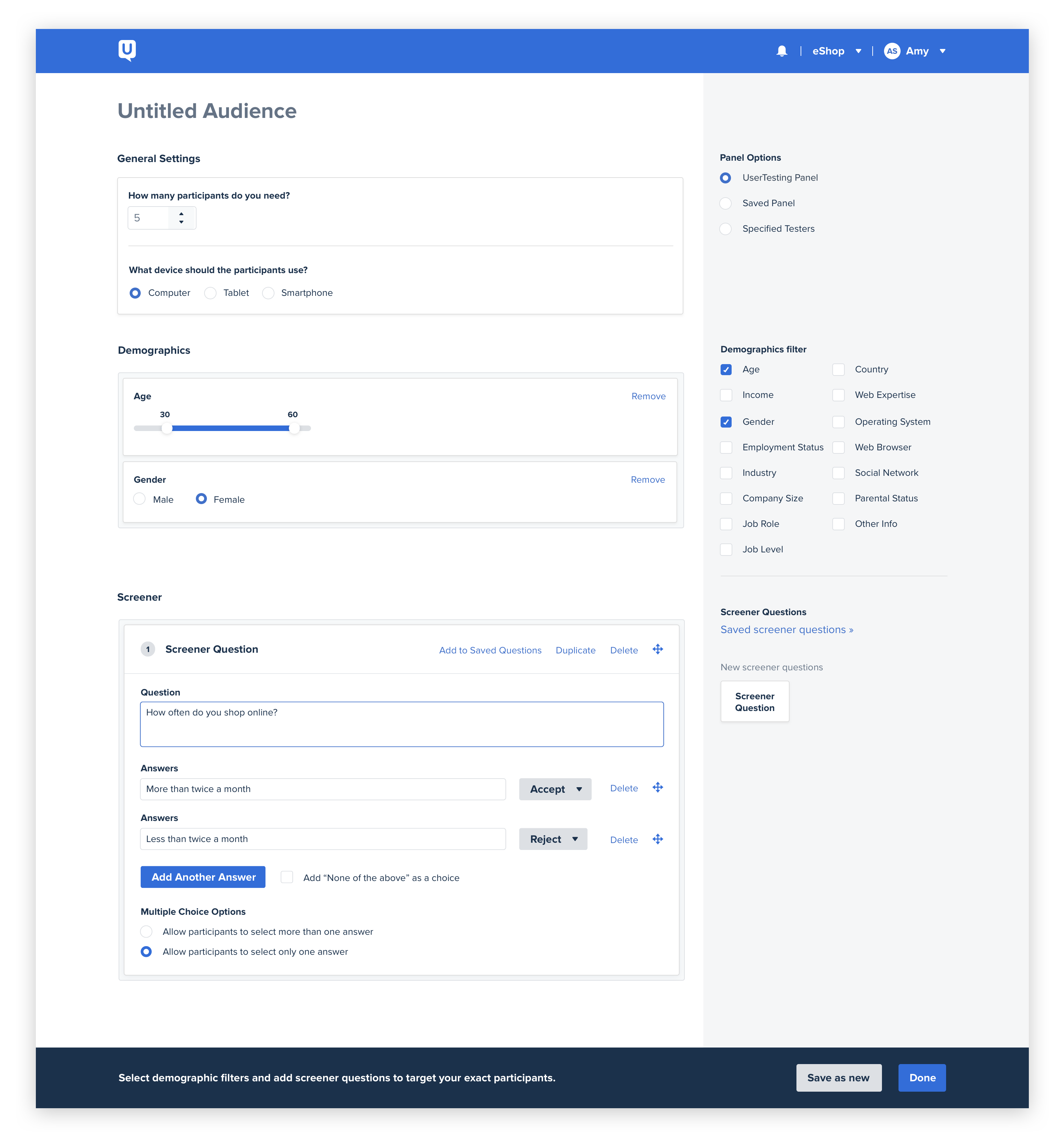

After gathering input from our customers, we see a clear trend: end users care deeply about the quality of their own generative and evaluative research, while buyers care deeply about operationalizing and democratizing research as a way to ensure UX quality.